Microsoft has released its generative AI model Phi-4 with fully open weights on the Hugging Face platform. Since its unveiling in December 2024, Phi-4 has drawn attention for its strong performance in mathematical reasoning and multitask language understanding, while requiring fewer computational resources than larger models.

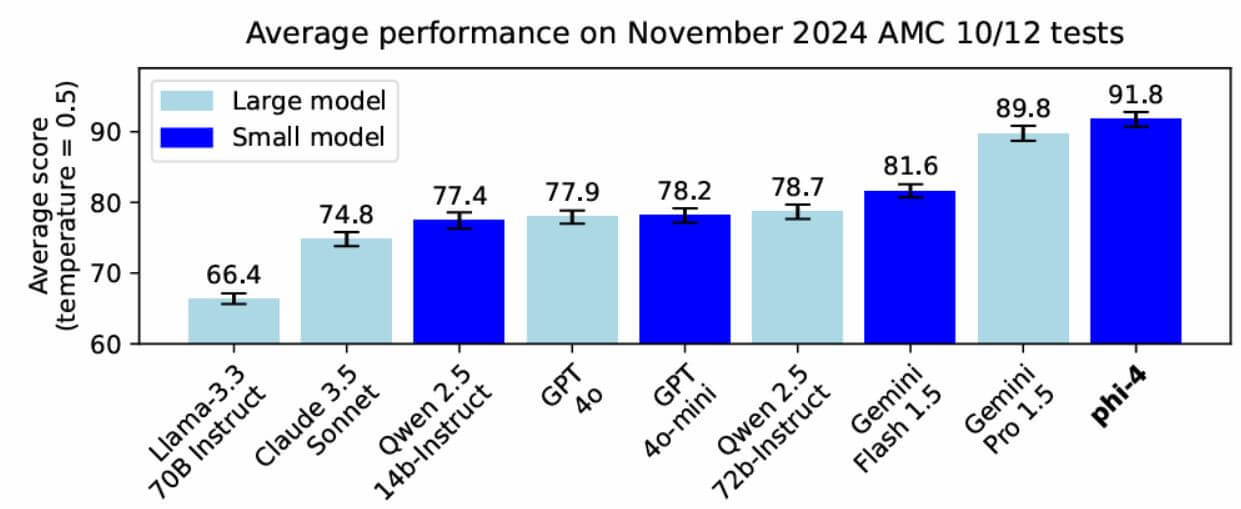

Phi-4, with 14 billion parameters, is designed to compete with models such as GPT-4o mini, Gemini 2.0 Flash, and Claude 3.5 Haiku.
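To put the 14-billion-parameter figure in perspective, the sketch below estimates how much memory the weights alone would occupy at a few common storage precisions. It is a back-of-the-envelope calculation, not a measurement: it assumes memory equals parameter count times bytes per parameter and ignores activations, the KV cache, and framework overhead. The precision list and the helper name `weight_memory_gb` are illustrative choices, not something taken from Microsoft's documentation.

```python
# Rough weight-only memory estimate for a 14-billion-parameter model.
# Assumption: memory = parameters x bytes per parameter. Activations,
# KV cache, and runtime overhead are deliberately ignored.

PHI4_PARAMS = 14_000_000_000  # parameter count stated in the article

# Bytes per parameter for common storage precisions (illustrative choices).
BYTES_PER_PARAM = {
    "float32": 4.0,
    "float16/bfloat16": 2.0,
    "int8": 1.0,
    "4-bit": 0.5,
}


def weight_memory_gb(num_params: int, bytes_per_param: float) -> float:
    """Return weight storage in gigabytes (1 GB = 10**9 bytes)."""
    return num_params * bytes_per_param / 1e9


if __name__ == "__main__":
    for precision, nbytes in BYTES_PER_PARAM.items():
        size = weight_memory_gb(PHI4_PARAMS, nbytes)
        print(f"{precision:>18}: {size:6.1f} GB")
```

Under these assumptions the weights come to roughly 28 GB at 16-bit precision and about 7 GB when quantized to 4 bits, which gives a sense of why a model of this size can run on far more modest hardware than much larger models.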

This Small Language Model (SLM) is optimized for complex mathematical calculations, logical reasoning, and efficient multitasking. Despite its smaller size, Phi-4 delivers high performance, processes long contexts, and is well suited to applications that demand precision and efficiency. Another standout feature is its MIT license, which permits free use, modification, and distribution, even for commercial purposes.

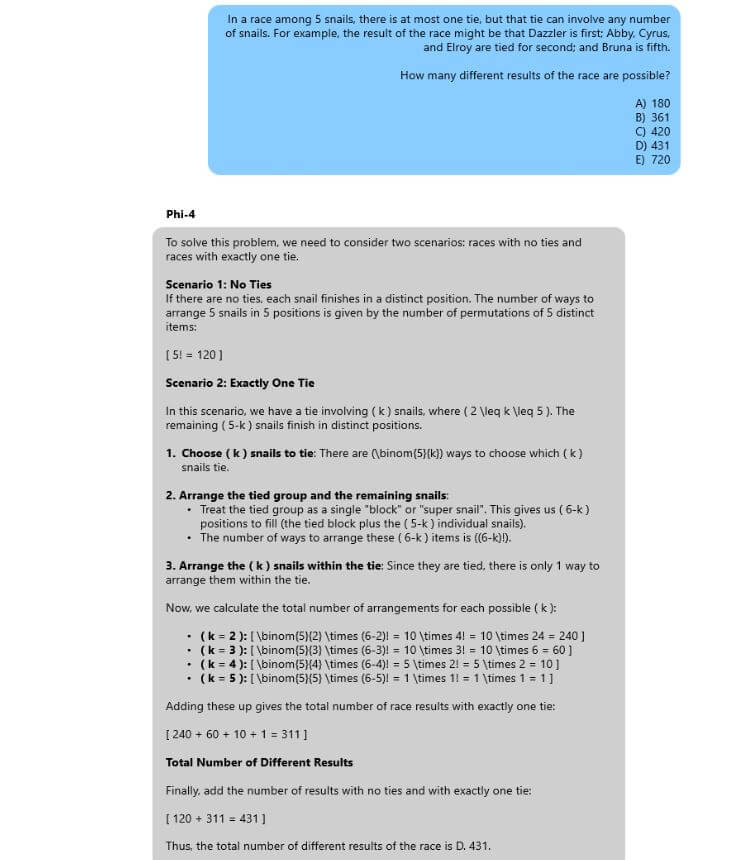

Microsoft has further enhanced the model using synthetic data and fine-tuning techniques, improving its accuracy in tasks that require reasoning. An example of Phi-4’s mathematical reasoning capabilities is shown in the accompanying figure.

In April 2024, Microsoft launched Phi-3 Mini, the first in the Phi-3 series of small language models. It featured 3.8 billion parameters and was trained on a smaller dataset than larger models such as GPT-4. This was followed in August by the Phi-3.5 models, including Phi-3.5-vision and Phi-3.5-MoE, which used synthetic data and filtered public datasets and supported contexts of up to 128,000 tokens. This line of small-model development culminated in the release of Phi-4.

Initially, Phi-4 was available through the Azure AI Foundry platform. Microsoft has now released it on the Hugging Face platform with open weights under an MIT license. Phi-4 is also available through Ollama.
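As a rough illustration of what using the open weights might look like, here is a minimal sketch based on the Hugging Face `transformers` text-generation pipeline. Several things are assumptions rather than details from this article: the repository id `microsoft/phi-4`, the helper names `build_messages` and `ask_phi4`, the system prompt, and the presence of `transformers`, `torch`, and `accelerate` on a machine with enough memory for a 14-billion-parameter model.

```python
# Sketch: querying Phi-4 through the Hugging Face transformers pipeline.
# Assumptions: the weights live under the repository id below, and
# transformers, torch, and accelerate are installed.

MODEL_ID = "microsoft/phi-4"  # assumed Hugging Face repository id


def build_messages(question: str) -> list[dict]:
    """Wrap a question in the chat-message format the pipeline accepts."""
    return [
        # Hypothetical system prompt, chosen only for illustration.
        {"role": "system", "content": "You are a careful math assistant."},
        {"role": "user", "content": question},
    ]


def ask_phi4(question: str, max_new_tokens: int = 256) -> str:
    """Download the model on first use and return its reply as text."""
    # Imported lazily so this module loads without transformers installed.
    from transformers import pipeline

    generator = pipeline(
        "text-generation",
        model=MODEL_ID,
        model_kwargs={"torch_dtype": "auto"},  # keep the checkpoint's dtype
        device_map="auto",  # needs accelerate; spreads layers over devices
    )
    outputs = generator(build_messages(question), max_new_tokens=max_new_tokens)
    # With chat-style input, generated_text holds the whole conversation;
    # the final entry is the newly generated assistant message.
    return outputs[0]["generated_text"][-1]["content"]
```

Calling `ask_phi4("What is the derivative of x**3?")` triggers a download of the full weights the first time it runs, so it is worth checking disk space and memory beforehand.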

Phi-4 excels in several key areas. It outperforms larger models in mathematical computations, including solving complex problems, making it ideal for applications that demand high-precision calculations. It is also highly efficient in multitasking and logical reasoning, supports long-context processing, and achieves high performance with limited computational resources. These features make Phi-4 an excellent choice for integration into scientific and commercial projects that require both precision and resource optimization.

With Phi-4’s weights publicly available, developers can freely integrate the model into their projects and customize it for specific tasks, significantly expanding its potential applications across various fields.

For more details on the technical specifications, we recommend reviewing the full technical report on arXiv.